We have already created a VG (volume group). Now we are going to use that VG to create LVs (logical volumes) and put filesystems on them.

First, let's check the VG size and the free space available. The vgdisplay and vgs commands will do that for us.

Here we find that only one VG is available on the server. If there are several VGs and you want the details of a particular one, pass its name: vgs VG_NAME or vgdisplay VG_NAME.
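Before creating an LV, it can help to check that the VG actually has room for it. The sketch below assumes the LVM2 reporting options --noheadings, --nosuffix, --units b and the vg_free field; bytes are used so the reported value is exact. The helpers vg_free_bytes and fits are hypothetical names, not part of LVM:

```shell
# Hypothetical helper: free space of a VG in bytes (needs LVM2 tools, usually root).
vg_free_bytes() {
  vgs --noheadings --nosuffix --units b -o vg_free "$1" | tr -d ' '
}

# Hypothetical helper: succeed if NEED bytes fit into FREE bytes.
fits() {
  [ "$1" -le "$2" ]
}

# The 100M used with lvcreate later in this post is 100 * 1024 * 1024 bytes.
need=$((100 * 1024 * 1024))
```

On the server it would be used as: fits "$need" "$(vg_free_bytes newVG)" && echo "newVG has room for a 100M LV".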

Currently I do not have any LVs on the server apart from the root (/) filesystem.

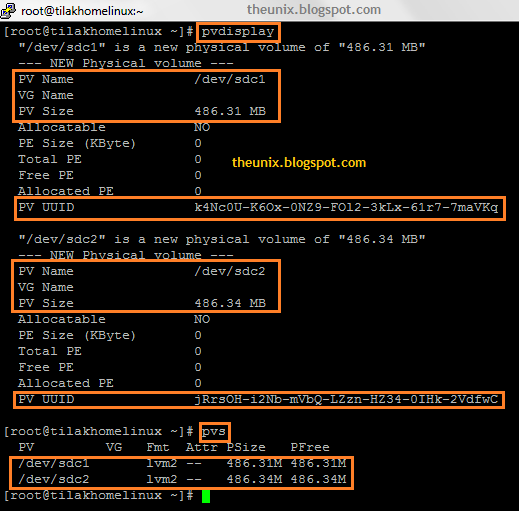

Just for additional information, pvscan, vgscan and lvscan are also useful commands for verifying PVs, VGs and LVs.

OK, thanks for your patience. Let's start creating LVs.

There are several ways to create an LV, which will be discussed later. The most commonly used method is shown below.

Syntax:

lvcreate -L SIZE -n LV_NAME VG_NAME

Example:

lvcreate -L 100M -n newLV1 newVG
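LVM allocates space in whole physical extents (PE), so a -L size that is not a multiple of the VG's PE size is rounded up. A small arithmetic sketch, assuming the common vgcreate default PE size of 4 MiB (check yours in the "PE Size" line of vgdisplay); extents_for is a hypothetical helper. The extent count can also be given directly with lvcreate -l, for example lvcreate -l 25 -n newLV1 newVG for the same 100M:

```shell
# Hypothetical helper: extents needed for SIZE_MIB when each extent is PE_MIB.
# Integer ceiling division, mirroring LVM's round-up to whole extents.
extents_for() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

# 100M with 4 MiB extents is exactly 25 extents; 102M would round up to 26.
extents_for 100 4
```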

So, now the LV is created successfully.

To verify, check the lvdisplay and lvscan output.

We have now created the LV and verified it.
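If you want the same check inside a script, the lvs listing can be filtered instead of reading lvdisplay by eye. This is a sketch, assuming lvs accepts the report fields vg_name and lv_name with --noheadings; has_lv is a hypothetical helper that reads that listing from stdin:

```shell
# Hypothetical helper: succeed if the VG/LV pair appears in a listing read
# from stdin in "lvs --noheadings -o vg_name,lv_name" format (one LV per line).
has_lv() {
  awk -v vg="$1" -v lv="$2" '$1 == vg && $2 == lv { found = 1 } END { exit !found }'
}
```

Usage on the server: lvs --noheadings -o vg_name,lv_name | has_lv newVG newLV1 && echo "newLV1 is present in newVG".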

The next step is to format the LV with a filesystem before mounting it on a mount point.

The device can be formatted with many different filesystem types; newer Red Hat/CentOS versions support ext4.

The command for that is mkfs.ext4.

The version I am using does not support ext4, so we are going to format the LV with ext3.
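To let the same steps work on both older and newer releases, a script could prefer ext4 and fall back to ext3 when mkfs.ext4 is not installed. A minimal sketch; pick_mkfs is a hypothetical helper that only looks at which formatters are on the PATH:

```shell
# Hypothetical helper: print the first available formatter, preferring ext4.
# Returns non-zero if neither mkfs.ext4 nor mkfs.ext3 is found on the PATH.
pick_mkfs() {
  for mkfs_cmd in mkfs.ext4 mkfs.ext3; do
    if command -v "$mkfs_cmd" >/dev/null 2>&1; then
      echo "$mkfs_cmd"
      return 0
    fi
  done
  return 1
}
```

Usage as root: "$(pick_mkfs)" /dev/newVG/newLV1. On the system in this post it would pick mkfs.ext3.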

To format the LV, we need the full path of the LV device we created (here /dev/newVG/newLV1). It can be seen in the lvdisplay and lvscan output in the previous and following screenshots in this post.

Note that /dev/newVG/newLV1 is linked to /dev/mapper/newVG-newLV1. The /dev/mapper name is the one shown as the device for the mount point, even if you mount it using /dev/newVG/newLV1. You can see this in the "mount -l" output after mounting.
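The /dev/mapper name is the VG name and LV name joined by a single hyphen; device-mapper doubles any hyphen inside either name so the separator stays unambiguous. A sketch of that naming rule, with mapper_path as a hypothetical helper. On a live system, readlink -f /dev/newVG/newLV1 shows which device node the path finally resolves to:

```shell
# Hypothetical helper: /dev/mapper path for a VG name and an LV name.
# Hyphens inside a name are doubled; a single hyphen separates VG from LV.
mapper_path() {
  mp_vg=$(printf '%s' "$1" | sed 's/-/--/g')
  mp_lv=$(printf '%s' "$2" | sed 's/-/--/g')
  printf '/dev/mapper/%s-%s\n' "$mp_vg" "$mp_lv"
}

mapper_path newVG newLV1    # /dev/mapper/newVG-newLV1
mapper_path data-vg home    # /dev/mapper/data--vg-home
```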

Current "mount -l" output:

The filesystem type can also be verified with "mount -l", but only after the LV is mounted.

Let's format the LV device and mount it on a mount point.

Syntax:

mkfs.ext3 LV_DEVICE_PATH

Example:

mkfs.ext3 /dev/newVG/newLV1

Next, create a mount point: a directory that will serve as the mount point for this LV. It can be any path, anywhere on the server.

I am going to create it directly under /, as /newFS.

mkdir /newFS

Mount the LV on the directory just created, which is called the mount point.

mount /dev/newVG/newLV1 /newFS

Verify with df -h before and after mounting the filesystem, and also with "mount -l".

After mounting, the "mount -l" output shows:

/dev/mapper/newVG-newLV1 on /newFS type ext3 (rw)
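To pick the filesystem type out of that output in a script, the line can be matched on its mount point. A sketch assuming the "DEVICE on DIR type FSTYPE (OPTIONS)" layout shown above; fstype_of is a hypothetical helper. Where util-linux findmnt is available, findmnt -n -o FSTYPE /newFS gives the same answer directly:

```shell
# Hypothetical helper: print the filesystem type mounted on DIR, reading
# "mount -l" style lines ("DEVICE on DIR type FSTYPE (OPTIONS)") from stdin.
fstype_of() {
  awk -v dir="$1" '$2 == "on" && $3 == dir && $4 == "type" { print $5 }'
}
```

Usage: mount -l | fstype_of /newFS would print ext3 for the mount above.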

The new filesystem is now mounted. The last step is to add this mount point and filesystem to /etc/fstab so that it is mounted automatically at boot.

First, check the current /etc/fstab entries.

Edit the /etc/fstab file and add the new mount point as below.
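A sketch of the fstab line for this LV, assuming common choices for a non-root data filesystem: options "defaults", dump flag 1 and fsck pass 2 (the root filesystem normally uses pass 1). fstab_entry is a hypothetical helper that only prints the line; it does not edit the file:

```shell
# Hypothetical helper: print an /etc/fstab line for the new filesystem.
# Fields: device, mount point, type, options, dump flag, fsck pass order.
fstab_entry() {
  printf '%s\t%s\t%s\t%s\t%s %s\n' "$1" "$2" "$3" defaults 1 2
}

fstab_entry /dev/mapper/newVG-newLV1 /newFS ext3
```

As root, the output could be appended with fstab_entry /dev/mapper/newVG-newLV1 /newFS ext3 >> /etc/fstab. Running umount /newFS followed by mount -a then remounts it from the new entry, so a typo shows up now rather than at the next boot.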

That completes the whole LVM setup.

Quick Recap of the Complete LVM Setup
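The whole flow can be condensed into one hypothetical script. The variable names, the run wrapper and the DRY_RUN switch are assumptions, added so the commands can be reviewed before anything touches a disk. It formats with ext3 as in this post and leaves the /etc/fstab edit as a manual step:

```shell
# Hypothetical recap script: the steps from this post, in order.
# Prints each command by default; run with DRY_RUN=0 (as root) to execute them.
VG=newVG
LV=newLV1
SIZE=100M
MNT=/newFS

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

lvm_recap() {
  run vgs "$VG" &&                            # 1. check VG size and free space
  run lvcreate -L "$SIZE" -n "$LV" "$VG" &&   # 2. create the LV
  run lvs "$VG" &&                            # 3. verify it
  run mkfs.ext3 "/dev/$VG/$LV" &&             # 4. format it (ext3 here)
  run mkdir -p "$MNT" &&                      # 5. create the mount point
  run mount "/dev/$VG/$LV" "$MNT" &&          # 6. mount it
  run df -h "$MNT"                            # 7. check the result
  # 8. add the /etc/fstab entry by hand (not automated here)
}

lvm_recap
```

Each step only runs if the previous one succeeded, so a failed lvcreate stops the script before mkfs.ext3 is reached.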